How synthetic datasets reveal true identities

If 2022 was the year when the disruptive potential of generative AI first captured widespread public attention, then 2023 is the year when the legitimacy of its foundational data became a focal point for businesses eager to harness its power.

The doctrine of fair use in the United States, along with the implicit academic license that has long allowed academic and commercial research sectors to explore generative artificial intelligence, is becoming increasingly untenable as more evidence of plagiarism surfaces.

Therefore, the United States temporarily does not allow copyright protection for content generated by artificial intelligence.

These issues are far from resolved and far from being immediately resolved; in the year, partly due to growing concerns among the media and the public about the legal status of AI-generated outputs, the U.S. Copyright Office launched a multi-year investigation into this aspect of generative AI, releasing the first part (concerning digital reproductions) in the month of the year.

https://copyright.gov/ai/Copyright-and-Artificial-Intelligence-Part-1-Digital-Replicas-Report.pdf

Meanwhile, business interest groups remain frustrated as the expensive models they wish to leverage could expose them to legal repercussions once clear legislation and definitions are finally enacted.

An expensive short-term solution is to legitimize generative models by training them on data that the company is legally entitled to use. The text-to-image (now text-to-video) architecture is primarily supported by the image library purchased in [year], supplemented with data from the public domain where copyright has expired.

At the same time, current stock photo suppliers such as and have leveraged the new value of their licensed data by authorizing content through increasing transactions or developing their own systems that comply with standards.

composite solution

Due to the complexities involved in removing copyrighted data from the latent space of a trained AI model, companies attempting to use machine learning for consumer and commercial solutions may face significant costs from errors in this area.

For computer vision systems (and large language models, or LLMs), an alternative, more cost-effective solution is to use synthetic data, where datasets are composed of randomly generated examples from the target domain (such as faces, cats, churches, or even more general datasets).

Websites like . have long promoted the idea that it is possible to synthesize photos of "unreal" people that look authentic (in this specific case, through the use of Generative Adversarial Networks (GANs)), without any connection to actual people in the real world.

Therefore, if facial recognition systems or generative systems are trained on these abstract, non-real examples, theoretically, it is possible to achieve photo-realistic productivity standards for the model without considering whether the data is legally used.

balance act

The issue is that the systems generating synthetic data are themselves trained on real data.

If traces of these data permeate the synthetic data, it may prove that restrictive or unauthorized materials have been exploited for profit.

To avoid this and to generate truly "random" images, such models need to ensure they have good generalization capabilities.

Generalization ability is the standard for measuring the capability of a trained artificial intelligence model to fundamentally understand advanced concepts such as "face," "man," or "woman" without replicating the actual training data.

Unfortunately, unless the dataset is extensively trained, the trained system struggles to generate (or recognize) details. This puts the system at risk of memorization: to some extent, it tends to reproduce examples from the actual training data.

This situation can be mitigated by setting a more relaxed learning rate, or by ending training at a stage where the core concepts remain malleable and are not tied to any specific data point (e.g., a particular image of a person in the case of a facial dataset).

However, both of these remedies may result in insufficiently detailed models, as the system does not have the opportunity to go beyond the "basics" of the target domain and delve into the details.

Therefore, in scientific literature, very high learning rates and comprehensive training programs are commonly employed.

Although researchers typically attempt to strike a balance between the broad applicability and granularity of the final model, systems that even slightly "memorize" often mistakenly believe they possess good generality—even in initial tests.

Face revealed

This brings us to an interesting new paper from Switzerland, which claims to have demonstrated for the first time the ability to recover the original real image used to synthesize data from theoretically completely random generated images:

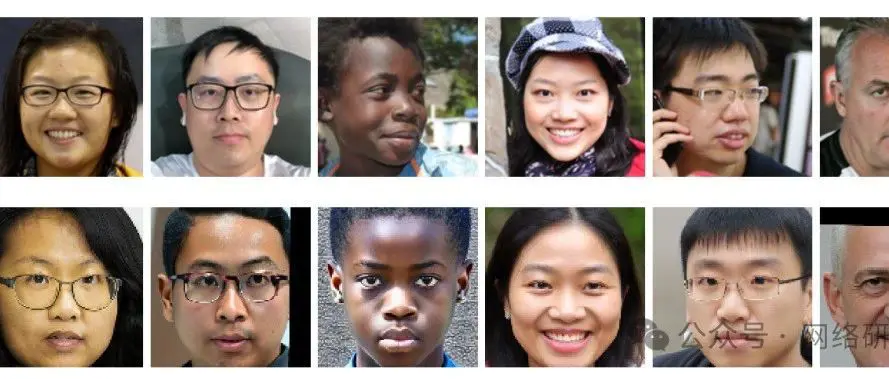

Example face images leaked from the training data. In the top row, we see the original (real) images; in the bottom row, we see randomly generated images that are clearly consistent with the real images.

The author believes that the results indicate that the "synthetic" generator indeed memorized a large number of training data points, seeking greater granularity. They also point out that systems relying on synthetic data to protect AI producers from legal consequences may be highly unreliable in this regard.

Researchers conducted extensive studies on six of the most advanced synthetic datasets, demonstrating that the original (possibly copyrighted or protected) data can be recovered in all cases.

They commented:

Our experiments show that the state-of-the-art synthetic face recognition datasets contain samples that are very close to those in the training data of their generator models. In some cases, the synthetic samples undergo minor alterations to the original images, but we also observe that in certain instances, the generated samples include more variations (such as different poses, lighting conditions, etc.) while still preserving the identity.

This indicates that the generator model is learning and memorizing identity-related information from the training data, and may generate similar identities. This raises serious concerns about the application of synthetic data in privacy-sensitive tasks such as biometrics and facial recognition.

This paper is titled "Unveiling Synthetic Faces: How Synthetic Datasets Expose Real Identities."

https://arxiv.org/pdf/2410.24015

Written by two researchers from the Martigny Institute, École Polytechnique Fédérale de Lausanne (EPFL), and the University of Lausanne (UNIL).

Methods, Data, and Results

The faces of memory in the study are revealed through membership inference attacks. Although this concept may sound complex, it is quite self-explanatory: in this context, inferring membership refers to the process of questioning the system until it reveals data that matches or closely resembles the data you are looking for.

More examples of inferred data sources from the study. In this case, the source synthetic images are from the dataset.

Researchers studied six synthetic datasets, the (true) origins of which are known. Given the large number of images in both the real and fake datasets involved, this is essentially like finding a needle in a haystack.

Therefore, the author utilized an off-the-shelf facial recognition model†, which serves as the backbone, and was trained on the loss function (on the dataset).

The six synthetic datasets used are: (Latent Diffusion Model); - (Diffusion Model based on ); - (- Variants using different sampling methods); (Based on Generative Adversarial Networks and Diffusion Models, using generated initial identities, then creating various examples); (A method based on -); and (Identity Protection Framework).

Due to the simultaneous use of and diffusion methods, it was compared with the training dataset of — the network's closest approximation to the "real face" source.

The author excluded synthetic datasets that utilized the "not" method, and during the evaluation, the matching rate was reduced due to abnormal distribution of children, as well as non-face images (which often occurs in face datasets, where web scraping systems produce false positives for objects or artifacts with similar facial features).

Calculate the cosine similarity for all retrieved pairs and connect them into a histogram as shown below:

The histogram represents cosine similarity scores calculated across different datasets, along with the similarity correlation values for the top pairs (indicated by the dashed vertical lines).

The quantity of similarity is represented by the spikes in the figure above. This article also provides sample comparisons for six datasets, along with the corresponding estimated images from the original (real) datasets, some of which are selected as follows:

Samples extracted from numerous instances reproduced from the source paper are available for readers to make more comprehensive selections.

The paper comments:

The generated synthetic dataset contains images that are very similar to the training set of its generator model, raising concerns about such identity generation.

The author points out that for this specific method, scaling to larger datasets may not be efficient, as the necessary computations would be extremely burdensome.

They further observed that visual comparison is necessary for inferring matches, and relying solely on automatic facial recognition may not be sufficient for larger tasks.

Regarding the significance of this study and its future development directions, the study points out:

We would like to emphasize that the primary motivation for generating synthetic datasets is to address privacy concerns when using large-scale web-scraped face datasets.

Therefore, the leakage of any sensitive information (such as the identities of real images in the training data) in synthetic datasets raises serious concerns about the application of synthetic data to privacy-sensitive tasks (such as biometrics).

Our research uncovers privacy pitfalls in the generation of synthetic face recognition datasets and paves the way for future research in generating responsible synthetic face datasets.

Although the author promised to release the code for this work on the project page, there is currently no repository link available.

https://www.idiap.ch/paper/unveiling_synthetic_faces/

Recent media attention has focused on the diminishing returns obtained from training AI models using data generated by artificial intelligence.

However, this new study in Switzerland focuses on a question that may be more pressing for the growing number of companies hoping to leverage generative AI and profit from it—patterns of data that are protected by intellectual property or unauthorized still exist, even in datasets designed to combat such practices.

If we must give it a definition, in this case, it might be called "washing the face."

However, the decision to allow users to upload images generated by to actually compromises the legal "purity" of this data. Bloomberg claimed in [month] [year] that images provided by users of the system have been incorporated into the functionality of .